Research Interests

Our team designs and develops secure and efficient computer systems for high intelligence and automation. We study security and privacy issues, and their solutions, across a range of systems. We also build large-scale distributed systems for emerging applications. We have been working on the following topics:

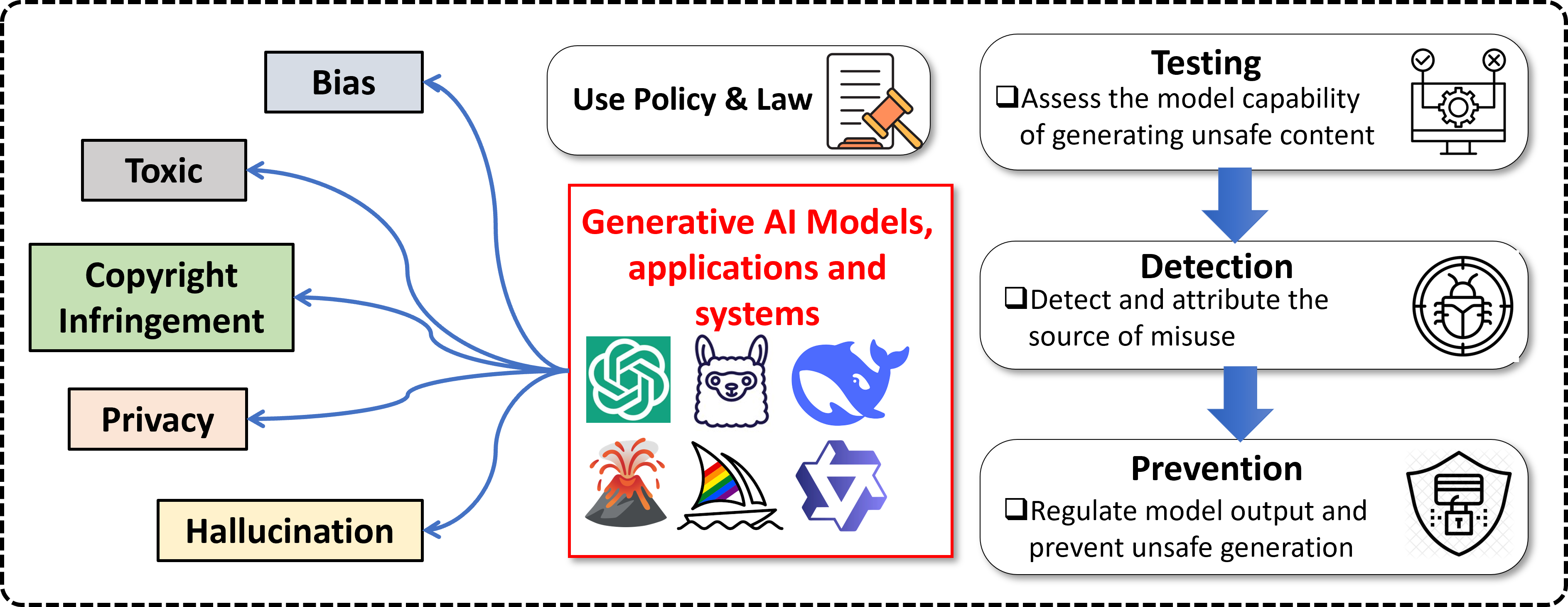

Large Generative Models have made remarkable strides in very recent years. Their widespread adoption has also raised ethical and societal concerns. We focus on the understanding and enhancement of generative AI safety from different perspectives, including vulnerability identification and categorization, safety testing and mitigation, misuse detection and tracing. We aim to build general methodologies to cover different tasks and videos (text, image, video, audio, etc.), systems and applications (AI agent, embodied AI, AI chatbot and search, etc.).

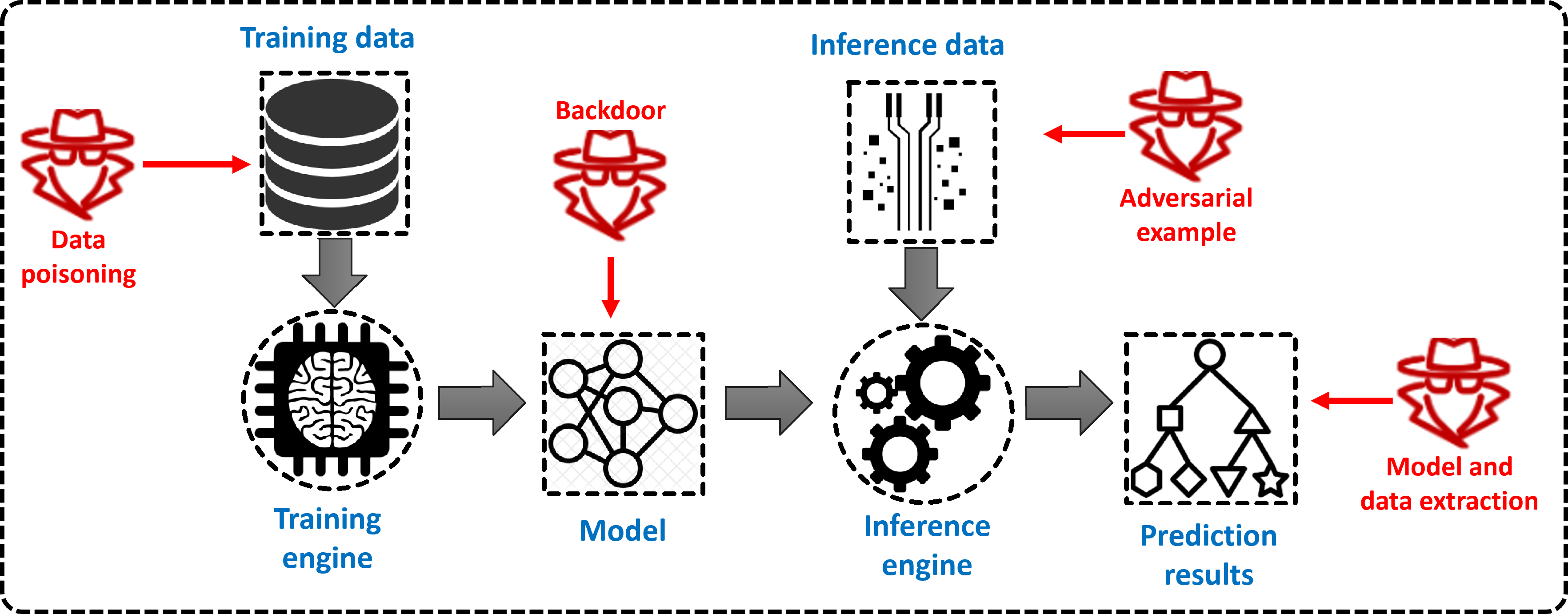

The emerging deep learning technology has been widely commercialized in our daily life. However, it also introduces new security and privacy threats, which could bring disastrous consequences in critical scenarios. Our goal is to investigate the security vulnerabilities as well as defense solutions for various deep learning systems, such as computer vision, natural language processing, reinforcement learning, distributed federated learning, etc. We are interested in the following security problems.

Benefiting from the advances in mechanics, sensors, artificial intelligence and networked systems, a variety of powerful robots have been designed to perform different tasks autonomously, ranging from space exploration to daily life housework to manufacturing. However, the complexity in the robot systems inevitably enlarges the attack surface, and brings new security challenges in the design and development of robot applications. Our group aims to explore the security problems at different layers of robotic and autonomous driving systems, and possible mitigation solutions.

Modern deep learning models are becoming more complex with huge production and deployment cost. Consequently, IT corporations, research institutes and cloud providers build large-scale GPU clusters to ease the development of DL training and inference jobs. It is important to schedule these jobs and allocate the valuable resources in an efficient and scalable way. We are interested in designing new systems and frameworks to manage, optimize and accelerate deep learning workloads in large-scale datacenters.

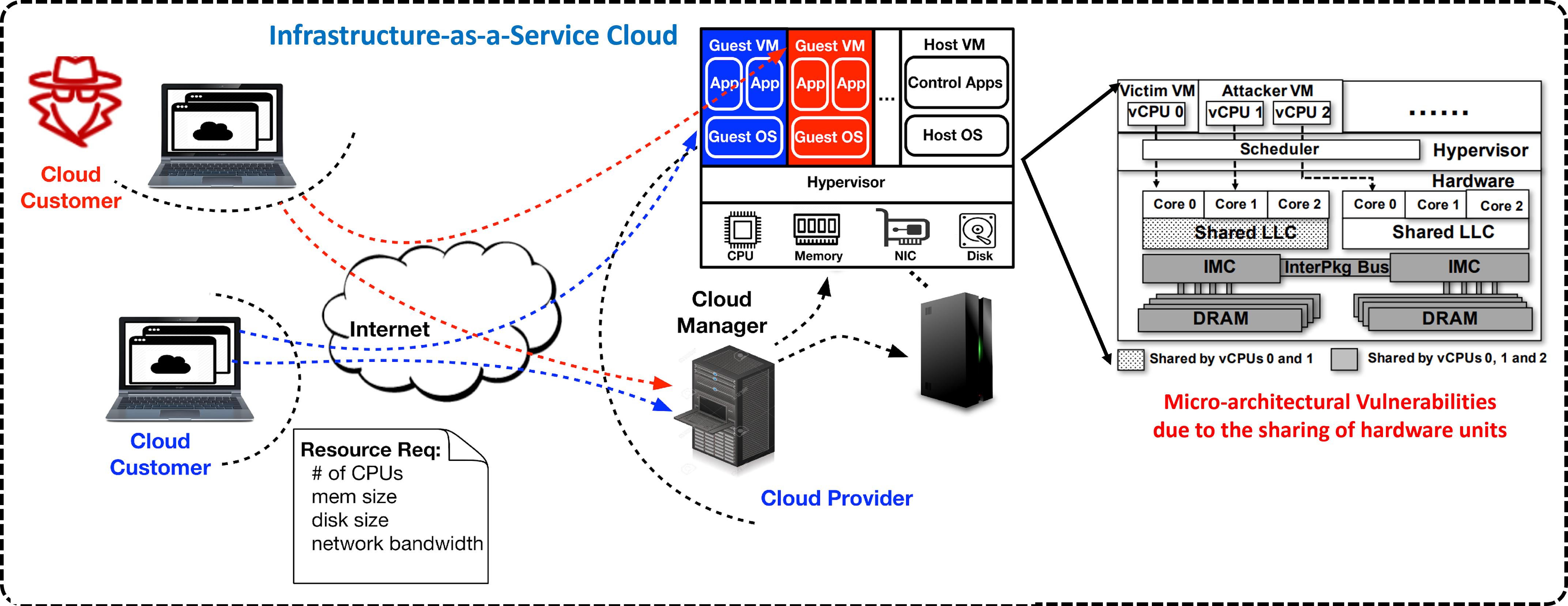

Infrastructure-as-a-Service (IaaS) clouds provide computation and storage services to

enterprises and individuals with increased elasticity and low cost. Cloud customers

rent resources in the form of virtual machines (VMs). However, these VMs may face

various security threats. We build security-aware computer architectures to attest and protect the security of cloud applications. We also design new methodologies to assess and mitigate micro-architectural side-channel attacks.

Generative AI Safety.

Deep learning security.

Robotics and autonomous driving security.

Machine learning system optimization and acceleration.

Computer architecture and cloud computing security.

Projects

- Ongoing Grants:

  - [2025-2029] Co-PI, NTU-0G Joint Lab: Decentralized AI: Training, Alignment, Agents and Beyond

  - [2025-2029] Co-PI, NRF CREATE: Quantum Security and Resilience for Emerging Technologies (QUASAR)

  - [2025-2028] PI, AUMOVIO-NTU Corp Lab: AI-driven Development for Automotive HPC with High Efficiency and Compatibility

  - [2025-2027] PI, CRPO: Security Testbed Design and Vulnerability Analysis of EV Charging Systems

  - [2024-2027] Co-PI, CRPO: Secure, Private, and Verified Data Sharing for Large Model Training and Deployment

  - [2024-2027] Co-PI, DTC: Combatting Prejudice in AI: A Responsible AI Framework for Continual Fairness Testing, Repair, and Transfer

  - [2023-2027] Co-PI, CSA: Trustworthy AI Centre NTU (TAICeN)

  - [2024-2026] PI, NRF NCR DeSCEmT: Computation-efficient and Unified Defenses against Side-channel Attacks and Integrity Attacks

  - [2023-2026] Co-PI, AISG Grand Challenge: Towards Building Unified AV Scene Representation for Physical AV Adversarial Attacks and Visual Robustness Enhancement

- Completed Grants:

  - [2023-2025] PI, AISG: Resource-Efficient AI “Human mesh reconstruction, Learning from small datasets, Self-supervised learning”

  - [2022-2025] PI, MoE AcRF Tier2: A Framework for Intellectual Property Protection of Deep Learning Applications

  - [2024-2025] PI, NTU S-Lab: Towards Performance Optimization and Improvement for Big Models

  - [2020-2025] PI, NTU S-Lab: Efficient GPU Cluster Scheduler for Distributed Deep Learning

  - [2019-2025] PI, Continental NTU Corp Lab: Smart Infrastructure for Data Driven Societies

  - [2023-2024] PI, CSA: Securing Open-source Packages in the Software Supply Chain Through Visibility and Verification

  - [2022-2024] PI, NTU Desay: A Systematic Study about the Integrity Threats and Protection of Sensory Data in Autonomous Vehicles

  - [2021-2024] PI, AISG: Trustworthy and Explainable AI “Safe, Fair and Robust AI System Development, Transparent or Explainable AI System Development, Explainability and Trust (Safe, Fair, Robust) Assessment”

  - [2020-2024] PI, NRF NCR CHFA: Building Security Tools for Investigating and Introspecting Applications in Trusted Execution Environment

  - [2020-2024] PI, MoE AcRF Tier1 Seed: Design and Evaluation of Cyber Attacks against AI Chips

  - [2020-2024] PI, MoE AcRF Tier1: Detecting and Preventing Robotic Attacks

  - [2020-2023] PI, NTU Desay: A Cloud-based Framework for Protecting Autonomous Vehicles

  - [2019-2023] PI, NTU SUG: General Frameworks for Quantifying and Defeating Side-channel Attacks