Deepu Rajan

Associate Professor

College of Computing and Data Science, Nanyang Technological University

| HOME | RESEARCH | TEACHING | BIOGRAPHY | LINKS |

Visual Attention Detection |

| Subspace estimation and analysis |

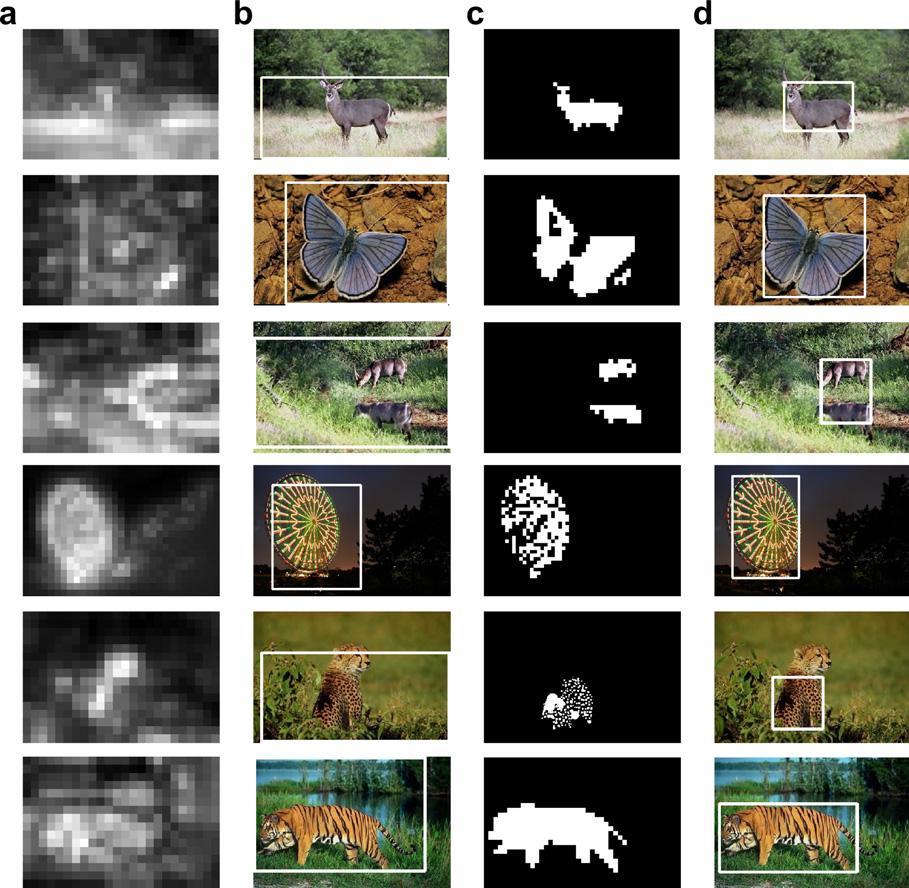

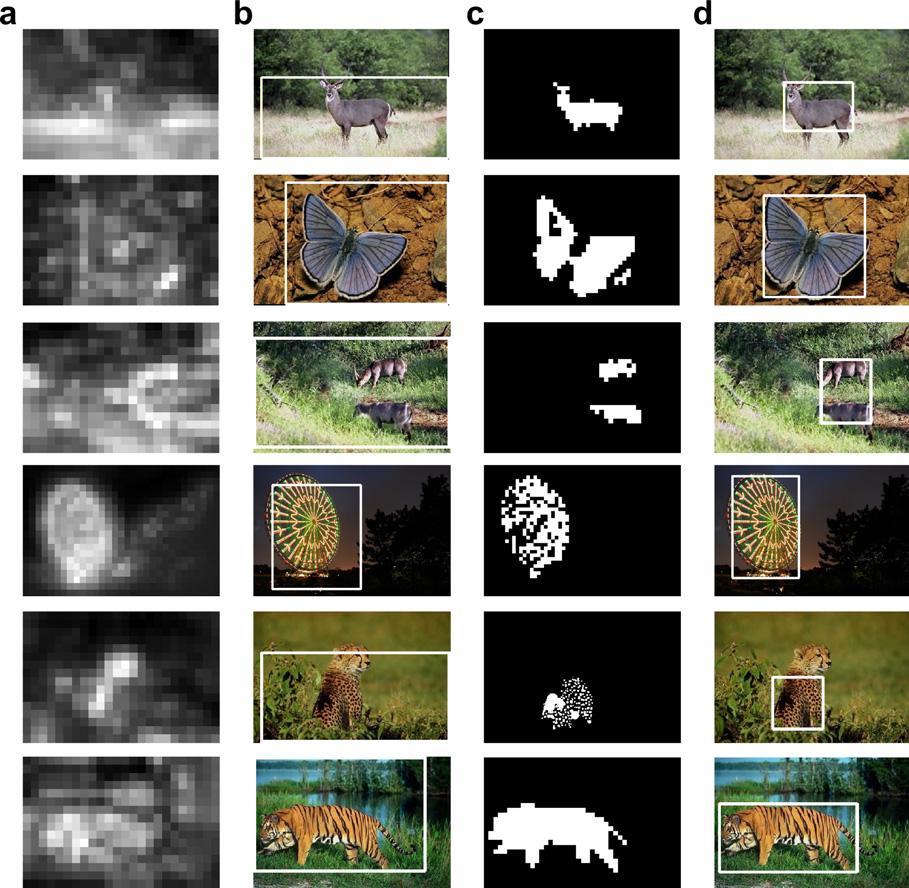

| We describe a new framework to extract visual attention regions in images using robust subspace estimation and analysis techniques. We use simple features like hue and intensity endowed with scale adaptivity in order to represent smooth and textured areas in an image. A polar transformation maps homogeneity in the features into a linear subspace that also encodes spatial information of a region. A new subspace estimation algorithm based on the Generalized Principal Component Analysis (GPCA) is proposed to estimate multiple linear subspaces. Sensitivity to outliers is achieved by weighted least squares estimate of the subspaces in which weights calculated from the distribution of K nearest neighbors are assigned to data points. Iterative refinement of the weights is proposed to handle the issue of estimation bias when the number of data points in each subspace is very different. A new region attention measure is defined to calculate the visual attention of each region by considering both feature contrast and spatial geometric properties of the regions. Compared with existing visual attention detection methods, the proposed method directly measures global visual attention at the region level as opposed to pixel level. |

|

| (a)

Saliency map from Itti et al. (PAMI98) and (b) corresponding bounding

box. (c) Attention region using subspace analysis and (d) corresponding

bounding box. |

| Attention-from-Motion |

| We introduce the notion of attention-from-motion in which the objective is to identify, from an image sequence, only those object in motions that capture visual attention (VA). Following the important concept in film production, viz, the tracking shot, we define the attention object in motion (AOM) as those that are tracked by the camera. Three components are proposed to form an attention-from-motion framework: (i) a new factorization form of the measurement matrix to describe dynamic geometry of moving object observed by moving camera; (ii) determination of single AOM based on the analysis of certain structure on the motion matrix; (iii) an iterative framework for detecting multiple AOMs. The proposed analysis of structure from factorization enables the detection of AOMs even when only partial data is available due to occlusion and over-segmentation. Without recovering the motion of either object or camera, the proposed method can detect AOM robustly from any combination of camera motion and object motion and even for degenerate motion. |